This chapter presents an overview of input and output plugins, video and audio filters, and transitions. They are the most common building blocks of OpenVIP networks and therefore deserve special treatment.

Although they could be implemented as modules (according to the IModule interface), there are special interfaces that simplify the development of these plugins.

A complete list of available plugins can be obtained at any time using the list_plugins.py Python script.

It is clear that every correct OpenVIP network must contain so-called input and output modules, i.e. modules which have no input or output connectors, respectively. An input module typically reads data from a file or another data stream; note that OpenVIP itself is flexible enough to cooperate with almost any source. An output module writes the data to a file or another destination.

The previous chapter presented an overview of modules and their methods. We learned that a module has to implement at least four methods: EnumConnectors, SetStreams, QueryRequirements and Process. However, most input plugins behave similarly (e.g. their QueryRequirements method returns an empty request list). It is therefore reasonable to use a simplified interface for input plugins:

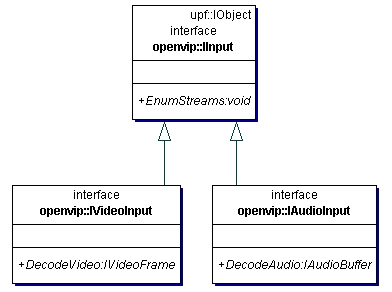

Note that IInput is an abstract interface and that every input plugin has to implement at least one of the IVideoInput or IAudioInput interfaces. The OpenVIP core calls the EnumStreams method to obtain a list of streams that are available in this source. EnumStreams returns a list of StreamInfo objects, i.e. the same type of information that IModule::SetStreams would return.

Depending on the returned stream types, the core then queries the object for specialized interfaces. If EnumStreams returns one or more STREAM_VIDEO entries, the input plugin object must implement the IVideoInput interface, and the core will use the DecodeVideo method to ask the plugin for a particular video frame. Similarly, if the plugin reports one or more STREAM_AUDIO streams, the input plugin object must implement the IAudioInput interface, and the core will use the DecodeAudio method to ask the plugin to decode a specific block of audio.
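The following self-contained C++ sketch shows how this division of labour looks for a tiny video-only input plugin. StreamInfo, VideoFrame, IInput and IVideoInput are simplified stand-ins written for this example, not the real OpenVIP declarations. The DecodeVideo(stream, frame) signature and the SolidGreyInput plugin class are hypothetical. The core's query for a specialized interface is modelled with dynamic_cast rather than with OpenVIP's own mechanism.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical, reduced versions of the types named in the text.
enum StreamType { STREAM_VIDEO, STREAM_AUDIO };

struct StreamInfo {
    StreamType type;
    int width, height;  // meaningful for video streams only
    long length;        // number of frames
};

struct VideoFrame {
    int width, height;
    std::vector<std::uint8_t> rgb;  // RGB24: 3 bytes per pixel
};

class IInput {
public:
    virtual ~IInput() {}
    virtual std::vector<StreamInfo> EnumStreams() = 0;
};

class IVideoInput : public virtual IInput {
public:
    // Assumed signature: stream index within the source, frame number.
    virtual VideoFrame DecodeVideo(int stream, long frame) = 0;
};

// Hypothetical generator plugin: one video stream whose frame n is a
// solid grey image with brightness n % 256.
class SolidGreyInput : public IVideoInput {
public:
    SolidGreyInput(int w, int h, long frames) : w_(w), h_(h), frames_(frames) {}

    std::vector<StreamInfo> EnumStreams() override {
        StreamInfo s = {STREAM_VIDEO, w_, h_, frames_};
        return std::vector<StreamInfo>(1, s);  // one STREAM_VIDEO entry
    }

    VideoFrame DecodeVideo(int stream, long frame) override {
        assert(stream == 0 && frame >= 0 && frame < frames_);
        std::uint8_t grey = static_cast<std::uint8_t>(frame % 256);
        VideoFrame f = {w_, h_, std::vector<std::uint8_t>(3 * w_ * h_, grey)};
        return f;
    }

private:
    int w_, h_;
    long frames_;
};

// Models the core's check: a source reporting STREAM_VIDEO entries
// must also provide the video-specific interface.
bool ProvidesVideo(IInput& input) {
    return dynamic_cast<IVideoInput*>(&input) != nullptr;
}
```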

One might now ask: how is it possible to use an input plugin in the same way as a module if it doesn't implement the IModule interface? Let's have a look at part of a network description file:

```xml
<module id="loader0" class="Input">
    <param name="filename">input.mpg</param>
    <param name="format">FFMpeg</param>
</module>
```

The Input class is a so-called input proxy module. This means that it is a true module (it implements the IModule interface), and its task is to translate IInput methods into IModule methods (and vice versa). The right input plugin is selected using the format parameter. In our case it is FFMpeg, which means that the Input class will call the methods of the FFMpegInput class. In general, the communication between the Input class and IInput plugins proceeds as follows:

Input::EnumConnectors calls IInput::EnumStreams to learn about the available streams. For each stream it creates an output connector with a standardized name such as video0, audio0 etc. It then returns the list of these connectors to the core.

The information from IInput::EnumStreams is also used in Input::SetStreams. This method simply copies the information about available streams to a new StreamInfoList and returns it to the core.

The Input::QueryRequirements method always returns an empty list, since an input plugin has no input connectors.

Finally, there is the Input::Process method. It reads the requests parameter supplied by the core and, for each request, calls either IAudioInput::DecodeAudio or IVideoInput::DecodeVideo, depending on the type of the request.
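The connector naming and request dispatch performed by the proxy can be sketched as follows. The helper names MakeConnectorNames and IsVideoRequest are illustrative only, and so is the simplified Request structure. The sketch also assumes that video and audio streams are numbered independently (video0, video1, ..., audio0, audio1, ...).

```cpp
#include <cassert>
#include <string>
#include <vector>

enum StreamType { STREAM_VIDEO, STREAM_AUDIO };

// Hypothetical simplified request: target connector and position.
struct Request {
    std::string connector;
    long position;
};

// One output connector per stream, with a separate counter per type.
std::vector<std::string> MakeConnectorNames(const std::vector<StreamType>& streams) {
    int video = 0, audio = 0;
    std::vector<std::string> names;
    for (StreamType t : streams) {
        if (t == STREAM_VIDEO)
            names.push_back("video" + std::to_string(video++));
        else
            names.push_back("audio" + std::to_string(audio++));
    }
    return names;
}

// Process-style dispatch: the connector name tells whether a request
// goes to DecodeVideo (true) or DecodeAudio (false).
bool IsVideoRequest(const Request& r) {
    assert(!r.connector.empty());
    return r.connector.compare(0, 5, "video") == 0;
}
```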

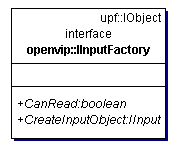

One more note about the input plugin autodetection mechanism: each input plugin should be accompanied by a so-called factory class which implements the IInputFactory interface.

The factory informs the core whether a specified file can be understood by the corresponding input plugin. The autodetection code instantiates all known IInputFactory implementations and calls their CanRead methods until one of the factories returns true. After that, the CreateInputObject method of this factory is called, and the returned IInput object is used to load the data from the file.
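Here is a minimal sketch of the autodetection loop. The interfaces are reduced to the two methods the paragraph mentions, CanRead and CreateInputObject. The parameters of the real IInputFactory methods may differ. ExtensionFactory, NamedInput and Autodetect are hypothetical names, and a real factory would more likely inspect the file contents than its extension.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, reduced interfaces.
class IInput {
public:
    virtual ~IInput() {}
    virtual std::string Name() const = 0;
};

class IInputFactory {
public:
    virtual ~IInputFactory() {}
    virtual bool CanRead(const std::string& filename) const = 0;
    virtual std::unique_ptr<IInput> CreateInputObject() const = 0;
};

class NamedInput : public IInput {
public:
    explicit NamedInput(std::string name) : name_(std::move(name)) {}
    std::string Name() const override { return name_; }
private:
    std::string name_;
};

// Example factory that recognises files by their extension only.
class ExtensionFactory : public IInputFactory {
public:
    ExtensionFactory(std::string ext, std::string plugin)
        : ext_(std::move(ext)), plugin_(std::move(plugin)) {}

    bool CanRead(const std::string& f) const override {
        return f.size() >= ext_.size() &&
               f.compare(f.size() - ext_.size(), ext_.size(), ext_) == 0;
    }

    std::unique_ptr<IInput> CreateInputObject() const override {
        return std::unique_ptr<IInput>(new NamedInput(plugin_));
    }

private:
    std::string ext_, plugin_;
};

// Ask the factories in turn. The first whose CanRead returns true
// creates the input object. Returns nullptr if no factory matches.
std::unique_ptr<IInput> Autodetect(
        const std::vector<std::unique_ptr<IInputFactory>>& factories,
        const std::string& filename) {
    assert(!filename.empty());
    for (const auto& factory : factories)
        if (factory->CanRead(filename))
            return factory->CreateInputObject();
    return nullptr;
}
```

The order of the factory list matters: if two factories claim the same file, the first one wins.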

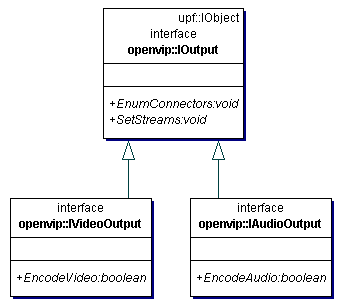

The situation with output plugins is almost the same. Such a plugin has to implement the IOutput interface and at least one of its specializations. There is also an output proxy class called Output, which translates between IModule and IOutput method calls.

The section Input and output plugins contains an overview of all implemented input and output plugins and their parameters. To develop a new input or output plugin, all you have to write is a class which implements the IInput or IOutput interface, respectively, and at least one of its specializations (and possibly a factory for the input plugin).

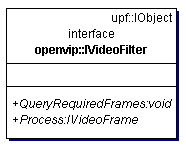

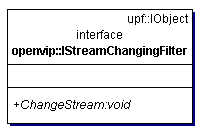

IVideoFilter is a simplified interface for video filter plugins.

As you might expect, there is a proxy class called VideoFilter which translates between IModule and IVideoFilter methods. The right video filter plugin is selected using the videofilter parameter of the VideoFilter module (see the network example in Network format).

A video filter always has one input and one output connector, and therefore the VideoFilter::EnumConnectors method returns two connectors, both named video0.

Most video filters don't change the video stream's parameters, such as width and height. That's why the VideoFilter::SetStreams method simply copies the input stream parameters. However, some video filters (such as Resize) need to modify the stream's parameters. These filters have to implement the IStreamChangingFilter interface.

The VideoFilter::SetStreams method then calls the filter's ChangeStream method to get information about the output stream.
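The idea behind IStreamChangingFilter can be sketched with hypothetical, reduced types. VideoStreamInfo holds only a few stream parameters, and the ChangeStream signature is assumed. The proxy's interface query is modelled with a C++ dynamic_cast, here a cross-cast between the two interface bases.

```cpp
#include <cassert>

// Hypothetical subset of the stream description.
struct VideoStreamInfo {
    int width;
    int height;
    double fps;
};

class IVideoFilter {
public:
    virtual ~IVideoFilter() {}
};

// Assumed shape of the stream-changing hook.
class IStreamChangingFilter {
public:
    virtual ~IStreamChangingFilter() {}
    virtual VideoStreamInfo ChangeStream(const VideoStreamInfo& in) const = 0;
};

// A resize-like filter: new frame size, everything else copied.
class ResizeSketch : public IVideoFilter, public IStreamChangingFilter {
public:
    ResizeSketch(int w, int h) : w_(w), h_(h) { assert(w > 0 && h > 0); }
    VideoStreamInfo ChangeStream(const VideoStreamInfo& in) const override {
        VideoStreamInfo out = in;
        out.width = w_;
        out.height = h_;
        return out;
    }
private:
    int w_, h_;
};

// A filter that leaves the stream parameters alone.
class PassThroughSketch : public IVideoFilter {};

// SetStreams-like logic: ask the filter if it changes the stream,
// otherwise copy the input stream parameters.
VideoStreamInfo OutputStream(const IVideoFilter& filter, const VideoStreamInfo& in) {
    if (const IStreamChangingFilter* c = dynamic_cast<const IStreamChangingFilter*>(&filter))
        return c->ChangeStream(in);
    return in;
}
```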

To produce a single output video frame, a video filter usually needs one or more input video frames. This is the purpose of the IVideoFilter::QueryRequiredFrames method. Its full prototype is:

```
void QueryRequiredFrames(in long frame, out FrameNumberList req_frames);
```

When a filter is asked to produce the frame with number frame, it enumerates all the frames required to fulfil this request and stores their numbers in the req_frames list.

VideoFilter::QueryRequirements then simply calls the filter's QueryRequiredFrames method. For each frame number in req_frames, it creates a Request structure with that frame number and the connector identifier video0.
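Here is how a hypothetical three-frame motion blur filter might answer QueryRequiredFrames, together with the proxy-side conversion into requests. Several details are assumptions made for this sketch: the Request fields, the clamping at the start of the stream, and the free-function form.

```cpp
#include <cassert>
#include <string>
#include <vector>

typedef std::vector<long> FrameNumberList;

// Hypothetical simplified Request: connector plus frame number.
struct Request {
    std::string connector;
    long frame;
};

// Motion-blur style requirement: output frame N needs input frames
// N-2, N-1 and N. Near the start only the existing frames are used.
void QueryRequiredFrames(long frame, FrameNumberList& req_frames) {
    assert(frame >= 0);
    req_frames.clear();
    for (long f = frame - 2; f <= frame; ++f)
        if (f >= 0)
            req_frames.push_back(f);
}

// Proxy side: wrap every required frame number in a Request
// addressed to the single input connector "video0".
std::vector<Request> QueryRequirements(long frame) {
    FrameNumberList req_frames;
    QueryRequiredFrames(frame, req_frames);
    std::vector<Request> reqs;
    for (long f : req_frames)
        reqs.push_back(Request{"video0", f});
    return reqs;
}
```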

The video filtering itself is performed by the IVideoFilter::Process call:

```
IVideoFrame Process(in long frame, in VideoFrameList inputs);
```

The VideoFilter::Process method only translates the Request structure to a video frame number and supplies it, together with the input frames, to the filter's Process method.

There is a class called SimpleVideoFilter which simplifies the development of new video filters even further. You may use this class if the following statement holds: to produce the output frame with number N, the filter needs only the input frame with number N. In fact, this is true for the overwhelming majority of video filters. Only special filters such as motion blur need more frames at once.
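The sketch below shows how a base class in the spirit of SimpleVideoFilter can reduce the general interface to a single DoProcess call. It does not use the real OpenVIP classes. Frame, VideoFilterBase, SimpleFilterSketch and BrightenSketch are hypothetical, and std::shared_ptr stands in for upf::Ptr.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <vector>

// Hypothetical frame type standing in for IVideoFrame.
struct Frame {
    std::vector<std::uint8_t> data;
};

// Simplified general filter interface (assumed shape).
class VideoFilterBase {
public:
    virtual ~VideoFilterBase() {}
    virtual std::vector<long> QueryRequiredFrames(long frame) = 0;
    virtual std::shared_ptr<Frame> Process(
        long frame, const std::vector<std::shared_ptr<Frame>>& inputs) = 0;
};

// Output frame N depends on input frame N only, so subclasses just
// implement DoProcess.
class SimpleFilterSketch : public VideoFilterBase {
public:
    std::vector<long> QueryRequiredFrames(long frame) override { return {frame}; }

    std::shared_ptr<Frame> Process(
            long, const std::vector<std::shared_ptr<Frame>>& inputs) override {
        assert(inputs.size() == 1);
        return DoProcess(*inputs[0]);
    }

protected:
    virtual std::shared_ptr<Frame> DoProcess(const Frame& in) = 0;
};

// Example subclass: brightens every byte by 40, saturating at 255.
class BrightenSketch : public SimpleFilterSketch {
protected:
    std::shared_ptr<Frame> DoProcess(const Frame& in) override {
        std::shared_ptr<Frame> out = std::make_shared<Frame>(in);  // copy
        for (std::uint8_t& b : out->data)
            b = static_cast<std::uint8_t>(b > 215 ? 255 : b + 40);
        return out;
    }
};
```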

The only thing a simple video filter has to do is implement the DoProcess method:

```cpp
virtual upf::Ptr<IVideoFrame> DoProcess(IVideoFrame *in);
```

This method simply gets an input frame, processes it and returns the output frame.

This section presents an example of a simple filter which inverts images. We start with the header and class declaration:

```cpp
#include <upf/upf.h>
#include "openvip/openvip.h"
#include "openvip/SimpleVideoFilter.h"

using namespace std;
using namespace upf;
using namespace openvip;

// A simple filter: output frame N is computed from input frame N only
class InvertVFilter : public SimpleVideoFilter
{
protected:
    Ptr<IVideoFrame> DoProcess(IVideoFrame *in);

    UPF_DECLARE_CLASS(InvertVFilter)
};

// UPF class registration: the provided interface and a short
// description (the description is shown by the GUI)
UPF_IMPLEMENT_CLASS(InvertVFilter)
{
    UPF_INTERFACE(IVideoFilter)
    UPF_PROPERTY("Description", "Simple invert")
}

// Export the class from the plugin library
UPF_DLL_MODULE()
{
    UPF_EXPORTED_CLASS(InvertVFilter)
}
```

There is a convention in OpenVIP that a video filter class name should end with the VFilter suffix (see also the openvip/doc/devel/coding.txt file). If you use such a filter in a network, it is then sufficient to specify the name without the suffix, e.g. Invert.

The UPF-related statements are always the same: you just use the right class name and provide a short description of your filter using the Description property. Be sure to enter this description, as it is used by the GUI.

The DoProcess method itself is simple, too:

```cpp
Ptr<IVideoFrame> InvertVFilter::DoProcess(IVideoFrame *in)
{
    int w = in->GetWidth();
    int h = in->GetHeight();
    // Writable copy of the input frame, converted to RGB24 if necessary
    Ptr<IVideoFrame> out = in->GetWCopy(FORMAT_RGB24);
    pixel_t *dst = out->GetWData(FORMAT_RGB24);
    // RGB24 stores 3 bytes (R, G, B) per pixel
    for (int i = 0; i < 3*w*h; i++)
        dst[i] = 255 - dst[i];
    return out;
}
```

We just ask for a writable copy of the input frame in RGB24 format and invert the individual colour components (there are 3*w*h of them, w*h for every colour channel).

This filter has the disadvantage that it always asks for an RGB24 image. If the input plugin reads frames in YV12 format, each frame must be converted before being processed. A more efficient version of our filter would first check in which formats the frame is available (using the GetFormats method) and then invert the image in that format.

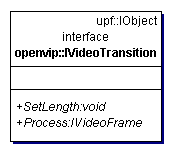

A video transition is an operation on two video streams which produces a single output video stream. The output usually looks like a gradual transition from the pictures of the first stream to the pictures of the second stream. Video transition classes implement the IVideoTransition interface.

Again, there is a proxy class called VideoTransition which makes video transitions behave like modules. We will not delve into the proxy implementation details here, as it is almost the same as the VideoFilter proxy class described above. We just note a few fixed conventions. The input connectors are always named video0 and video1, and the output connector is always video0. The video transition plugin is set using the transition parameter.

To write a new video transition, all you have to do is write a class which implements the SetLength and Process methods.

The SetLength method only informs the plugin about the transition's length in frames. It is always called before the actual computation begins. Here is the full prototype of the Process method:

```
IVideoFrame Process(in long frame, in IVideoFrame inputA, in IVideoFrame inputB);
```

This call asks the plugin to render the frame with number frame. Frame values range from 0 to N-1, where N is the value obtained from SetLength. The Process method blends the two images inputA and inputB together and returns the result. For example, a simple crossfade transition would perform the following operation on every pixel:

```cpp
*dst = (pixel_t)((1-alpha)*(*src_a) + alpha*(*src_b));
```

where alpha = frame/(N-1) must be evaluated in floating-point arithmetic. Integer division would give 0 for every frame except the last. The pointers src_a, src_b and dst point to the corresponding pixels.
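The crossfade formula can be sketched as a complete transition class with SetLength and Process. CrossfadeSketch is hypothetical: frames are plain byte buffers rather than IVideoFrame objects. The result is rounded to the nearest integer instead of truncated, and a one-frame transition simply shows the second input.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

typedef std::uint8_t pixel_t;

class CrossfadeSketch {
public:
    // Transition length N in frames; called before any Process().
    void SetLength(long n) { assert(n > 0); n_ = n; }

    // Blend frame `frame` (0 .. N-1) of the two inputs.
    std::vector<pixel_t> Process(long frame, const std::vector<pixel_t>& a,
                                 const std::vector<pixel_t>& b) const {
        assert(a.size() == b.size() && frame >= 0 && frame < n_);
        // Floating-point alpha: 0 at the first frame, 1 at the last.
        double alpha = n_ > 1 ? static_cast<double>(frame) / (n_ - 1) : 1.0;
        std::vector<pixel_t> out(a.size());
        for (std::size_t i = 0; i < a.size(); ++i)
            out[i] = static_cast<pixel_t>((1 - alpha) * a[i] + alpha * b[i] + 0.5);
        return out;
    }

private:
    long n_ = 1;
};
```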

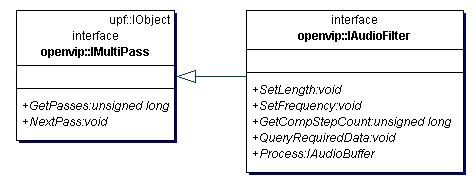

An audio filter is a class which implements the IAudioFilter interface.

The audio processing can be performed in one or more passes. You should review the facts about multi-pass modules in More on modules before reading the next paragraphs.

The meaning of the IAudioFilter interface methods will be explained using the normalization filter example. The normalization filter first scans the whole audio stream and looks for the maximum absolute sample value. It then scales all samples so that they cover the whole 16-bit range.

Normalization can clearly be performed in two passes (the first looks for the maximum, the second does the scaling). NormalizationAFilter::GetPasses therefore returns 2.

Here is the first pass. We have to report the number of computation steps. Let's say we search the audio stream in 64 kB blocks. The SetLength method tells us the total length of the audio stream. Our GetCompStepCount method therefore returns the number of 64 kB blocks needed to cover the total length (the last block may be incomplete). We will denote this number N.

The core (more precisely, the audio filter proxy class AudioFilter) now calls the QueryRequiredData method with computation step addresses 0, 1, ..., N-1. We reply with the AudioAddress structure identifying the appropriate 64 kB audio block. The core supplies that block to the Process method, and we scan it for the maximum absolute sample value.
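The first pass can be sketched as follows. The sketch assumes 16-bit samples and gives the block length in samples, not bytes. CompStepCount and PeakScanner are hypothetical names, not the methods of the actual NormalizationAFilter.

```cpp
#include <cassert>
#include <cstdint>
#include <cstdlib>
#include <vector>

typedef std::int16_t sample_t;

// Step count N: blocks of block_len samples needed to cover `length`
// samples, the last one possibly incomplete (rounded-up division).
long CompStepCount(long length, long block_len) {
    assert(length >= 0 && block_len > 0);
    return (length + block_len - 1) / block_len;
}

// First-pass state: Process() gets one block per computation step and
// keeps the largest absolute sample value seen so far.
struct PeakScanner {
    int peak = 0;  // int, because |-32768| does not fit in int16_t

    void Process(const std::vector<sample_t>& block) {
        for (sample_t s : block) {
            int a = std::abs(static_cast<int>(s));
            if (a > peak)
                peak = a;
        }
    }
};
```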

We are now done with the first pass and have the maximum value. The core calls the audio filter's NextPass method to switch it to the second pass. This is the last pass, and since we don't want our module to be terminal, GetCompStepCount should return 0.

The QueryRequiredData method now gets requests for audio blocks specified using an AudioAddress. To produce a normalized block of audio, we need the same block from the input stream. Our QueryRequiredData therefore simply copies the AudioAddress structure. The core then supplies that block to the Process method in the form of an audio buffer. We multiply each sample by a constant computed after the first pass and return the processed audio buffer.
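The second pass reduces to a gain computation and a per-sample multiplication. NormalizationGain and ScaleBlock are hypothetical helpers that assume 16-bit signed samples. Rounding and clamping are choices made for this sketch. A silent stream (peak 0) is left unchanged to avoid a division by zero.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

typedef std::int16_t sample_t;

// Constant computed after the first pass: maps the peak to full scale.
double NormalizationGain(int peak) {
    assert(peak >= 0);
    return peak == 0 ? 1.0 : 32767.0 / peak;
}

// Second-pass processing of one block: the output block is computed
// from the same input block, each sample scaled, rounded and clamped.
std::vector<sample_t> ScaleBlock(const std::vector<sample_t>& in, double gain) {
    std::vector<sample_t> out(in.size());
    for (std::size_t i = 0; i < in.size(); ++i) {
        long v = std::lround(in[i] * gain);
        if (v > 32767) v = 32767;
        if (v < -32768) v = -32768;
        out[i] = static_cast<sample_t>(v);
    }
    return out;
}
```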

Just a note on the AudioFilter class: the input and output connectors are both named audio0, and the filter is selected using the audiofilter parameter.

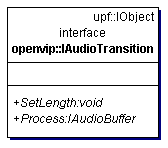

An audio transition is the audio analogue of a video transition. When blending two video sequences together, one usually wants to mix their audio tracks, too. Audio transition classes implement the IAudioTransition interface.

Its methods are almost the same as in IVideoTransition. Instead of frames, SetLength sets the transition's length in samples. The Process method now has the following prototype:

```
IAudioBuffer Process(in long pos, in IAudioBuffer inputA, in IAudioBuffer inputB);
```

It has to mix two audio buffers which correspond to the position pos in the input streams. This number ranges from 0 to N-1, where N is the value obtained from SetLength. A simple crossfade audio transition would perform the following operation on every sample:

```cpp
*dst = (sample_t)((1-alpha)*(*src_a) + alpha*(*src_b));
```

where alpha is the sample number divided by N-1 (again in floating-point arithmetic), and src_a, src_b and dst are pointers to the corresponding samples.
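A per-buffer version of the audio crossfade might look like the sketch below. It assumes mono 16-bit samples. It also assumes that pos is the transition index of the buffer's first sample, so sample i of the buffer uses alpha = (pos + i)/(N-1). CrossfadeAudio is a hypothetical helper, not the IAudioTransition API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

typedef std::int16_t sample_t;

// Mix buffers a and b, whose first sample sits at transition index
// `pos` of a transition that is n samples long.
std::vector<sample_t> CrossfadeAudio(long pos, long n,
                                     const std::vector<sample_t>& a,
                                     const std::vector<sample_t>& b) {
    assert(a.size() == b.size() && n > 0 && pos >= 0);
    assert(pos + static_cast<long>(a.size()) <= n);
    std::vector<sample_t> out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) {
        long k = pos + static_cast<long>(i);  // sample number in the transition
        double alpha = n > 1 ? static_cast<double>(k) / (n - 1) : 1.0;
        out[i] = static_cast<sample_t>((1 - alpha) * a[i] + alpha * b[i]);
    }
    return out;
}
```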

The transition's Process and SetLength methods are again called by the AudioTransition proxy class, which makes audio transitions behave like modules. The input connectors (set by the AudioTransition::EnumConnectors method) are always named audio0 and audio1, and the output connector is always audio0. The right audio transition plugin is selected using the transition parameter.