You already know that each network consists of modules. In this chapter you will learn how a module works and how to write new modules. A module is a class that implements the IModule interface. Let's have a look at the UML diagram of IModule and its derived interfaces:

The following sections present a brief introduction to these interfaces. For a complete description see the OpenVIP API reference documentation.

Imagine that you prepare a network description and submit it to the core. What does the core do?

First, it asks all the modules to enumerate their input and output

connectors. This is done using the EnumConnectors

method. Here is the full prototype:

void openvip::IModule::EnumConnectors(out ConnectorDescList in_conns, out ConnectorDescList out_conns)

The module simply assembles a list of its input connectors and puts

it into in_conns; the output connectors go into

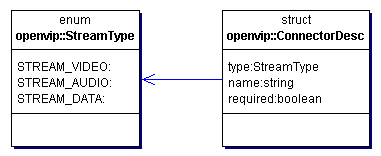

out_conns. A connector is described using a

ConnectorDesc structure:

Each connector must be given a name (e.g. audio0, video0 etc.) and a flag indicating whether the module requires the connector to be linked to another module. In addition, it is necessary to specify the corresponding stream type. Recall that OpenVIP supports three data types: video frames, audio buffers, and data packets (see Data types in OpenVIP). The stream type STREAM_VIDEO means that the corresponding connector can transport video frames, STREAM_AUDIO transports only audio buffers, and STREAM_DATA supports only data packets.

At this moment the core is able to link the modules and check whether the supplied network is correct, i.e. whether all required connectors have a link.
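The correctness check mentioned above can be sketched as follows. This is only an illustration, not the OpenVIP core: `ConnectorDesc` here is a stand-in struct with just the fields named in the text, and `ModuleConnectors` and `all_required_linked` are hypothetical names introduced for the example.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Stand-in for the stream types named in the text.
enum StreamType { STREAM_VIDEO, STREAM_AUDIO, STREAM_DATA };

// Stand-in connector description: type, name, and the "must be linked" flag.
struct ConnectorDesc {
    StreamType type;
    std::string name;
    bool required;
};

// Hypothetical record of what one module reported from EnumConnectors,
// plus one flag per connector saying whether the network links it.
struct ModuleConnectors {
    std::vector<ConnectorDesc> in_conns, out_conns;
    std::vector<bool> in_linked, out_linked;
};

// Returns true if every connector marked as required has a link.
bool all_required_linked(const std::vector<ModuleConnectors>& modules)
{
    for (const ModuleConnectors& m : modules) {
        assert(m.in_conns.size() == m.in_linked.size());
        assert(m.out_conns.size() == m.out_linked.size());
        for (std::size_t i = 0; i < m.in_conns.size(); ++i)
            if (m.in_conns[i].required && !m.in_linked[i]) return false;
        for (std::size_t i = 0; i < m.out_conns.size(); ++i)
            if (m.out_conns[i].required && !m.out_linked[i]) return false;
    }
    return true;
}
```

A real check would presumably also verify that both ends of a link have the same stream type; that part is left out here.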

Before the computation starts, all modules have to provide more detailed information about the data they will produce. This is done using the SetStreams method, which has the following prototype:

void openvip::IModule::SetStreams(in StreamInfoList in_streams, out StreamInfoList out_streams)

The module has to create the out_streams list

containing as many StreamInfo objects as there are

output connectors. The information in StreamInfo is

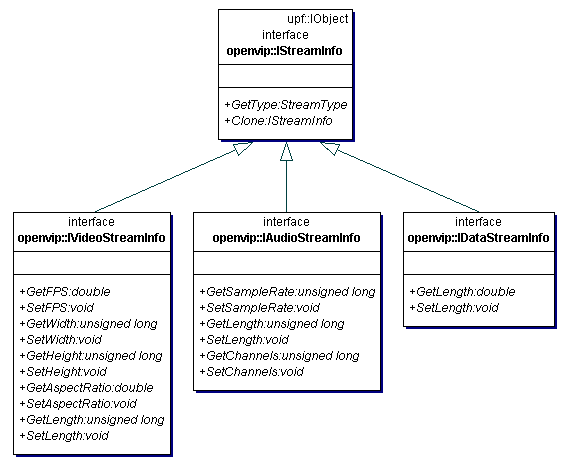

different for different stream types:

For a STREAM_VIDEO stream the module supplies a

VideoStreamInfo object with information about the

video frames it will produce including their dimensions, frame rate,

aspect ratio and the total number of frames. An

AudioStreamInfo object corresponds to a

STREAM_AUDIO stream and contains the sample rate, the

number of audio channels, and the total number of samples. A DataStreamInfo object simply provides the length of the STREAM_DATA stream in seconds.

A question now arises: how does a module determine its output

stream parameters if they depend on the data it will get from its input

connectors? E.g. a simple video filter such as Flip

produces video frames which are exactly of the same type as the input

video frames. The answer is that the core first asks the module which

precedes our video filter in the network about the data it will produce

(using SetStreams() again) and then supplies this

information to our video filter using the

in_streams parameter of the

SetStreams() method. This means that the input parameter in_streams contains one StreamInfo object for each input connector. Our video filter then simply copies the frame width, height etc. to its StreamInfo object in the out_streams list.

This procedure always makes sense: since networks cannot contain cycles, there is always a module which doesn't have any input connectors (these are usually the input modules which read data from files). The core first calls SetStreams() on this module, then on its successor, and so on.
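One way to obtain such a calling order is a topological sort of the network. The sketch below uses Kahn's algorithm; it is an assumption about how a core could do this, not a description of the actual OpenVIP scheduler. The `Link` type and the `set_streams_order` function are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical network description: modules are numbered 0..n-1 and each
// link (from, to) connects an output of `from` to an input of `to`.
typedef std::pair<std::size_t, std::size_t> Link;

// Returns an order in which SetStreams could be called so that every module
// comes after all of its predecessors (Kahn's algorithm).
// The result is shorter than module_count if the links contain a cycle.
std::vector<std::size_t> set_streams_order(std::size_t module_count,
                                           const std::vector<Link>& links)
{
    std::vector<std::size_t> pending_inputs(module_count, 0);
    std::vector<std::vector<std::size_t> > successors(module_count);
    for (const Link& l : links) {
        assert(l.first < module_count && l.second < module_count);
        successors[l.first].push_back(l.second);
        ++pending_inputs[l.second];
    }

    // Start with the modules that have no linked inputs (e.g. file readers).
    std::queue<std::size_t> ready;
    for (std::size_t m = 0; m < module_count; ++m)
        if (pending_inputs[m] == 0) ready.push(m);

    std::vector<std::size_t> order;
    while (!ready.empty()) {
        std::size_t m = ready.front();
        ready.pop();
        order.push_back(m);  // the core would call SetStreams on module m here
        for (std::size_t s : successors[m])
            if (--pending_inputs[s] == 0) ready.push(s);
    }
    return order;
}
```

When a module's turn comes, the stream information of all its predecessors is already known and can be passed in as in_streams.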

At this point we have collected information about all modules in the network and the computation can begin. It is driven by a scheduling algorithm, which decides what to compute next (see Internals of Network Processing). The scheduler asks the modules to produce particular pieces of data.

Imagine we have a simple video filter module which reads a video frame from its input stream, performs some operation on it and passes it to the output stream. Assume that the core asks our module to produce video frame number N. The module now replies to the core that in order to produce this frame it needs frame N from the preceding module in the network.

In general it could have required more input frames to produce a single output frame (consider a motion blur module which blends successive frames together). The communication between the core and the module about their requirements proceeds using the method

void QueryRequirements(in RequestList requests, out RequestList requirements);

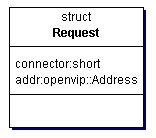

Its input and output parameters are lists of Request structures:

A request simply identifies the connector number (it is always an output connector in the case of the requests parameter and always an input connector in the case of the requirements parameter) and the piece of data required (see Data types in OpenVIP for an overview of address types).

The core calls the module's QueryRequirements method to ask it about the data required to fulfill its requests. The module reads the requests list, creates the requirements list and returns it to the core. Now it is the core's task to obtain the data specified in requirements. It usually generates a request to the preceding module, and so on. Note that each network must contain a module with no input connectors; such a module usually reads data from a file and therefore has no further requirements.
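To illustrate a module that needs several input frames for one output frame, here is a sketch of QueryRequirements for the motion blur case mentioned earlier. It rests on assumptions: `Request` is reduced to a connector index and a frame number (the real structure also carries an address type), and `blur_query_requirements` with its `window` parameter is a hypothetical free function, not part of OpenVIP.

```cpp
#include <cassert>
#include <vector>

// Simplified stand-in for Request: a connector index and a frame number.
struct Request {
    unsigned connector;
    unsigned long frame;
};
typedef std::vector<Request> RequestList;

// Hypothetical motion blur: output frame N is a blend of the input frames
// N-(window-1) .. N, clamped at the start of the stream.
void blur_query_requirements(const RequestList& requests,
                             RequestList& requirements,
                             unsigned long window)
{
    assert(window > 0);
    for (const Request& req : requests) {
        unsigned long first =
            req.frame >= window - 1 ? req.frame - (window - 1) : 0;
        for (unsigned long f = first; f <= req.frame; ++f) {
            Request need;
            need.connector = 0;  // the only input connector
            need.frame = f;
            requirements.push_back(need);
        }
    }
}
```

For a request for frame 5 with a window of 3, the requirements list would name input frames 3, 4 and 5 on input connector 0.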

Finally, after the core has obtained the data specified in requirements, it supplies the data to the module using the method

void Process(in RequestList requests, in DataFragmentList inputs, out DataFragmentList outputs)

The requests parameter has the same meaning as in QueryRequirements. The inputs list now contains the data the module asked for. After the module has processed the data, it returns the results in the outputs list.
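The interplay of QueryRequirements and Process can be sketched for a linear chain of modules. Everything in this example is a toy stand-in chosen for illustration: a "frame" is an int, a request is just a frame number, and `ToyModule`, `ToySource`, `ToyNegate` and `evaluate` are hypothetical names. The real core uses a scheduler rather than plain recursion.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Toy stand-ins: a "frame" is an int and a request is just a frame number.
typedef std::vector<unsigned long> RequestList;
typedef std::vector<int> DataFragmentList;

// Hypothetical module interface with the two methods discussed above.
struct ToyModule {
    virtual ~ToyModule() {}
    virtual void QueryRequirements(const RequestList& requests,
                                   RequestList& requirements) = 0;
    virtual void Process(const RequestList& requests,
                         const DataFragmentList& inputs,
                         DataFragmentList& outputs) = 0;
};

// Source with no inputs: "reads" frame N as the value 10 * N.
struct ToySource : ToyModule {
    void QueryRequirements(const RequestList&, RequestList&) {}
    void Process(const RequestList& requests, const DataFragmentList&,
                 DataFragmentList& outputs) {
        for (unsigned long n : requests)
            outputs.push_back(static_cast<int>(10 * n));
    }
};

// Filter: needs input frame N for output frame N and negates it.
struct ToyNegate : ToyModule {
    void QueryRequirements(const RequestList& requests,
                           RequestList& requirements) {
        requirements = requests;
    }
    void Process(const RequestList&, const DataFragmentList& inputs,
                 DataFragmentList& outputs) {
        for (int v : inputs) outputs.push_back(-v);
    }
};

// For a linear chain: ask module `index` what it needs, obtain that from the
// preceding module, then let the module process it.
DataFragmentList evaluate(const std::vector<ToyModule*>& chain,
                          std::size_t index, const RequestList& requests)
{
    assert(index < chain.size());
    RequestList requirements;
    chain[index]->QueryRequirements(requests, requirements);

    DataFragmentList inputs;
    if (!requirements.empty()) {
        assert(index > 0);  // only the first module may have no predecessor
        inputs = evaluate(chain, index - 1, requirements);
    }

    DataFragmentList outputs;
    chain[index]->Process(requests, inputs, outputs);
    return outputs;
}
```

The recursion stops at the source module, whose requirements list is empty, which mirrors the role of the module with no input connectors.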

Let's put the theory from the previous section into practice by designing a simple module which decomposes video frames into R, G, B channels. The code presented here is a simplified version of the RGBDecompose module from the OpenVIP distribution.

We start with the class declaration:

    class RGBDecompose : public IModule
    {
    public:
        void EnumConnectors(ConnectorDescList& in_conns, ConnectorDescList& out_conns);
        void SetStreams(const StreamInfoList& in_streams, StreamInfoList& out_streams);
        void QueryRequirements(const RequestList& requests, RequestList& requirements);
        void Process(const RequestList& requests, const DataFragmentList& inputs, DataFragmentList& outputs);

        UPF_DECLARE_CLASS(RGBDecompose)
    };

    UPF_IMPLEMENT_CLASS(RGBDecompose)
    {
        UPF_INTERFACE(IModule)
    }

As you can see, it is sufficient to implement only the four methods from the IModule interface. The UPF library learns about the existence of our class through the UPF_DECLARE_CLASS and UPF_IMPLEMENT_CLASS statements. We usually want to build the code in the form of a dynamic library and therefore we use the statement

    UPF_DLL_MODULE()
    {
        UPF_EXPORTED_CLASS(RGBDecompose)
    }

All we have to do now is to write the code for the four methods. The EnumConnectors method will look like this:

    void RGBDecompose::EnumConnectors(ConnectorDescList& in_conns, ConnectorDescList& out_conns)
    {
        // One input connector; the last argument marks it as required.
        in_conns.push_back(ConnectorDesc(STREAM_VIDEO, "video0", true));

        // Three optional output connectors, one per colour channel.
        out_conns.push_back(ConnectorDesc(STREAM_VIDEO, "video_r", false));
        out_conns.push_back(ConnectorDesc(STREAM_VIDEO, "video_g", false));
        out_conns.push_back(ConnectorDesc(STREAM_VIDEO, "video_b", false));
    }

The code says that our module has an input connector called video0 of type STREAM_VIDEO; this is the stream for reading input video frames. There are also three output connectors called video_r, video_g, video_b of type STREAM_VIDEO. The images corresponding to the R, G, B channels of the input frames will go there.

The SetStreams method is simple,

too:

    void RGBDecompose::SetStreams(const StreamInfoList& in_streams, StreamInfoList& out_streams)
    {
        // All three outputs have the same parameters as the single input.
        Ptr<IStreamInfo> info = in_streams[0];
        out_streams.push_back(info);
        out_streams.push_back(info);
        out_streams.push_back(info);
    }

Recall that the SetStreams method is responsible for setting the output video streams' width, height, aspect ratio etc. However, our module won't change any of these properties, so it is sufficient to set the output streams to match the input stream exactly.

Here comes the QueryRequirements

method:

    void RGBDecompose::QueryRequirements(const RequestList& requests, RequestList& requirements)
    {
        for (RequestList::const_iterator i = requests.begin(); i != requests.end(); i++)
        {
            // Same piece of data as requested, but taken from input connector 0.
            Request r(*i);
            r.connector = 0;
            requirements.push_back(r);
        }
    }

The core can ask for a video frame with number N from any of the three output streams. The code above says that to fulfill this request the module first needs to get the frame with number N from its input stream.

The actual RGB decomposition is done in the

Process method:

    void RGBDecompose::Process(const RequestList& requests, const DataFragmentList& inputs, DataFragmentList& outputs)
    {
        // Simplification: every request is served from the first input frame.
        Ptr<IVideoFrame> in(inputs[0]);
        Ptr<IVideoFrame> out;

        unsigned w = in->GetWidth();
        unsigned h = in->GetHeight();
        const pixel_t *in_data = in->GetData(FORMAT_RGB24);

        for (RequestList::const_iterator i = requests.begin(); i != requests.end(); i++)
        {
            // One new output frame per request, same size as the input.
            out = upf::create<IVideoFrame>();
            out->Create(w, h, FORMAT_RGB24);

            // The output connector number selects the channel to copy.
            switch (i->connector)
            {
            case 0:
                copy_channel_R(in_data + 0, out->GetWData(FORMAT_RGB24), w * h);
                break;
            case 1:
                copy_channel_G(in_data + 1, out->GetWData(FORMAT_RGB24), w * h);
                break;
            case 2:
                copy_channel_B(in_data + 2, out->GetWData(FORMAT_RGB24), w * h);
                break;
            }
            outputs.push_back((IDataFragment*)out);
        }
    }

First we ask the input video frame instance to get the image data in RGB24 format. Then we create a new video frame with the same dimensions and copy the requested channel into it. The copy_channel functions could look like this:

    void copy_channel_R(const pixel_t *in, pixel_t *out, size_t len)
    {
        // len is the number of pixels; each RGB24 pixel has three components.
        for (size_t i = len; i > 0; i--, in += 3, out += 3)
        {
            out[0] = *in;  // keep R
            out[1] = 0;    // clear G
            out[2] = 0;    // clear B
        }
    }

(Similarly for copy_channel_G and copy_channel_B.)
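For completeness, the two remaining helpers might be written as below. This is a sketch under two assumptions: `pixel_t` is taken to be a single 8-bit colour component (the text does not define it), and, as in the Process method above, `in` already points at the first G or B component of the input while `out` points at the start of the output pixel data.

```cpp
#include <cassert>
#include <cstddef>

// Assumption: pixel_t is one 8-bit colour component.
typedef unsigned char pixel_t;

// `in` points at the first G component (in_data + 1 in Process).
void copy_channel_G(const pixel_t *in, pixel_t *out, std::size_t len)
{
    assert(in != 0 && out != 0);
    for (std::size_t i = 0; i < len; ++i) {
        out[3 * i + 0] = 0;          // clear R
        out[3 * i + 1] = in[3 * i];  // keep G
        out[3 * i + 2] = 0;          // clear B
    }
}

// `in` points at the first B component (in_data + 2 in Process).
void copy_channel_B(const pixel_t *in, pixel_t *out, std::size_t len)
{
    assert(in != 0 && out != 0);
    for (std::size_t i = 0; i < len; ++i) {
        out[3 * i + 0] = 0;          // clear R
        out[3 * i + 1] = 0;          // clear G
        out[3 * i + 2] = in[3 * i];  // keep B
    }
}
```

Indexing with `3 * i` instead of advancing the pointers keeps every access inside the `len` pixels of each buffer.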

That's all - just compile the new code and you will be able to use

RGBDecompose in a network.

You might have noticed that the scheme described in the previous sections doesn't work well with terminal modules, i.e. modules with no output connectors (recall that QueryRequirements receives requests on output connectors). Imagine a terminal module which saves audio and video data to a file; how does the core tell the module to save a group of frames or a block of audio? The answer is that it doesn't. It is reasonable to suppose that the output module knows best the order in which data should be written (note that different output formats may employ different interleaving schemes).

The whole thing with terminal modules is solved using the idea of

computation steps. Terminal module classes have to

implement the ITerminalModule interface,

which is derived from IModule and in

addition contains the method

unsigned long GetCompStepCount();

A computation step corresponds to a logical unit of output data. The module is free to interpret a computation step any way it wishes. For a video output module, it may be equal to the frame number; a combined audio and video output module may interpret it as, e.g., one second of data (so that it can easily interleave audio and video data).

Before the whole network processing starts, each terminal module has to decide on the number of computation steps it needs to perform. The core then repeatedly calls QueryRequirements and Process using the ADDR_COMP_STEPS addressing scheme (see Data types in OpenVIP), with the computation step number equal to 0, 1, 2, ..., GetCompStepCount()-1.
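The loop the core runs for a terminal module can be sketched like this. `ToyTerminal` and `run_terminal` are hypothetical stand-ins: the toy module only records which steps it was asked to process, and the QueryRequirements call and the fetching of input data are reduced to a comment.

```cpp
#include <cassert>
#include <vector>

// Toy stand-in for a terminal module: it only records the steps it processed.
struct ToyTerminal {
    unsigned long step_count;
    std::vector<unsigned long> processed;

    explicit ToyTerminal(unsigned long steps) : step_count(steps) {}

    unsigned long GetCompStepCount() const { return step_count; }

    void Process(unsigned long step) {
        // An output module writing a file expects the steps in order.
        assert(step == processed.size());
        processed.push_back(step);
    }
};

// Drives one terminal module through all of its computation steps and
// returns the number of steps performed.
unsigned long run_terminal(ToyTerminal& module)
{
    const unsigned long steps = module.GetCompStepCount();
    for (unsigned long step = 0; step < steps; ++step) {
        // Real core: call QueryRequirements with an ADDR_COMP_STEPS request
        // for `step`, obtain the required inputs, then call Process.
        module.Process(step);
    }
    return steps;
}
```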

It is possible that a module is not able to compute its outputs in a

single pass; it might e.g. first want to scan the whole input stream

before it decides what to do. Such modules have to implement the

IMultiPassModule interface (see the UML

diagram at the beginning of the chapter).

Before the computation starts, each multipass module is asked for the number of passes using the GetPasses method; let us denote this number N. Since the module doesn't produce any output during the passes 1, ..., N-1, it always behaves as a terminal module, which means that the core calls its QueryRequirements and Process methods using computation steps (see the previous section). Each of the passes 1, ..., N-1 may consist of a different number of computation steps.

After a pass has finished, the core calls the NextPass method and continues with the next pass. A multipass module may be terminal in the last pass (e.g. a multipass output module), but is not required to be (e.g. a multipass audio filter); in the latter case its GetCompStepCount() returns 0 in the last pass.
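The pass loop can be sketched in the same toy style. `ToyMultiPass` and `run_passes` are hypothetical, the return types are guesses, and the sketch assumes that NextPass is called between passes but not after the last one, which the text does not state. Passes are numbered from 0 inside the code.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Toy multipass module: steps_per_pass[p] is the number of computation
// steps in pass p; the log records every (pass, step) that was processed.
struct ToyMultiPass {
    std::vector<unsigned long> steps_per_pass;
    std::size_t current_pass;
    std::vector<std::pair<std::size_t, unsigned long> > log;

    explicit ToyMultiPass(const std::vector<unsigned long>& steps)
        : steps_per_pass(steps), current_pass(0) {}

    std::size_t GetPasses() const { return steps_per_pass.size(); }
    unsigned long GetCompStepCount() const { return steps_per_pass[current_pass]; }
    void Process(unsigned long step) {
        log.push_back(std::make_pair(current_pass, step));
    }
    void NextPass() {
        assert(current_pass + 1 < steps_per_pass.size());
        ++current_pass;
    }
};

// Runs all passes; within each pass the module is driven by computation steps.
void run_passes(ToyMultiPass& module)
{
    const std::size_t passes = module.GetPasses();
    for (std::size_t pass = 0; pass < passes; ++pass) {
        const unsigned long steps = module.GetCompStepCount();
        for (unsigned long step = 0; step < steps; ++step)
            module.Process(step);  // preceded by QueryRequirements in a real core
        if (pass + 1 < passes)
            module.NextPass();
    }
}
```

A two-pass filter that scans its input in three steps and is not terminal in its last pass would be described here by the step counts 3 and 0; in that last pass it would be driven by ordinary requests on its output connectors instead.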

You may object that the whole thing with multipass modules seems weird: if a module first needs to scan all video frames from a file, why doesn't it use the ordinary QueryRequirements method with requirements for all frames? This would do the trick for files with only a few video frames. However, it is impossible to ask for hundreds or thousands of frames at once. A well-behaved module never requires extraordinarily large amounts of data and uses the multipass mechanism instead.