We have already introduced the concepts of networks, modules and connectors. To understand how a module works, we also need to know the data types that modules process. For example, different video filters can be connected in a single network only if they use a common video frame format.

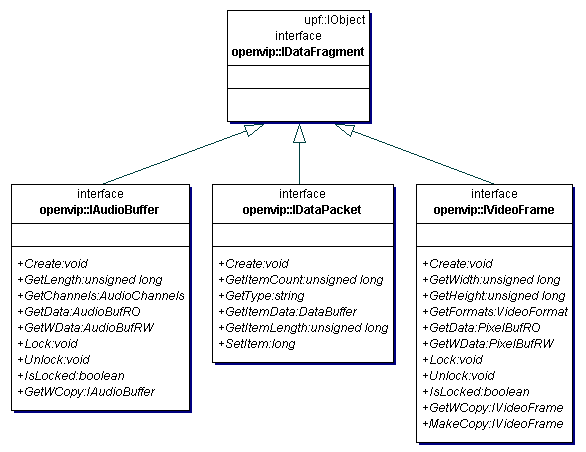

The data types supported by OpenVIP core are all derived from a base

interface called IDataFragment:

The diagram shows that there are three derived interfaces: IVideoFrame, IAudioBuffer and IDataPacket. The next sections briefly introduce each of them. For a detailed description see the OpenVIP API reference documentation.

The IVideoFrame interface provides a way to create new video frames and to convert them between different formats. The methods of the IVideoFrame interface are implemented in the openvip.VideoFrame class.

Uncompressed digital images can be stored in formats such as RGB, RGBA or YUV. RGB formats are widely used for still images such as JPG or PNG files. YUV formats, on the other hand, are common in video files such as AVI or MPEG because, with subsampled chroma, they consume less memory than RGB. Modules in an OpenVIP network may need both RGB and YUV formats: input plugins produce frames in whatever format the input file dictates, whereas an RGB decomposition filter needs its input in RGB.
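The memory argument can be checked with a little arithmetic. The sketch below assumes 8 bits per sample and, for YUV, the common 4:2:0 layout in which each chroma plane is subsampled by two in both directions. The format labels are illustrative names only, not OpenVIP constants.

```python
def frame_bytes(width, height, fmt):
    """Size in bytes of one uncompressed frame at 8 bits per sample."""
    pixels = width * height
    if fmt == "RGB24":
        # Three full-resolution samples (R, G, B) per pixel.
        return pixels * 3
    if fmt == "RGBA32":
        # RGB plus a full-resolution alpha sample.
        return pixels * 4
    if fmt == "YUV420":
        # Full-resolution luma plus two chroma planes at half width
        # and half height (rounded up for odd dimensions).
        chroma_w = (width + 1) // 2
        chroma_h = (height + 1) // 2
        return pixels + 2 * chroma_w * chroma_h
    raise ValueError("unknown format label: %r" % (fmt,))


rgb_size = frame_bytes(720, 576, "RGB24")    # 1244160 bytes
yuv_size = frame_bytes(720, 576, "YUV420")   # 622080 bytes
```

Under these assumptions a 720x576 frame in YUV 4:2:0 takes half the memory of the same frame in 24-bit RGB.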

The solution is that a module can ask the VideoFrame class to convert the frame to a specified format. Moreover, a VideoFrame instance can hold the same video frame in several formats at the same time. A video frame in a specific format is obtained using the GetData and GetWData calls. The former returns the frame data for reading only, while the latter returns data which can be modified.
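The idea of one frame object holding several representations can be sketched with a small toy class. This is not the openvip.VideoFrame implementation: the class, the method names get_data and get_wdata, and the rule that a writable request discards the other cached formats are all assumptions made for illustration. The colour conversion uses the usual BT.601 luma weights.

```python
def rgb_to_yuv(pixel):
    r, g, b = pixel
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return (y, 0.492 * (b - y), 0.877 * (r - y))


def yuv_to_rgb(pixel):
    y, u, v = pixel
    r = y + v / 0.877
    b = y + u / 0.492
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return (r, g, b)


CONVERTERS = {("RGB", "YUV"): rgb_to_yuv, ("YUV", "RGB"): yuv_to_rgb}


class ToyVideoFrame:
    """Hypothetical frame that caches one pixel list per format."""

    def __init__(self, fmt, pixels):
        self._formats = {fmt: list(pixels)}
        self.conversions = 0  # counts real conversions, for demonstration

    def get_data(self, fmt):
        """Read-only access: convert on first request, then reuse the cache."""
        if fmt not in self._formats:
            src_fmt, src = next(iter(self._formats.items()))
            convert = CONVERTERS[(src_fmt, fmt)]
            self._formats[fmt] = [convert(p) for p in src]
            self.conversions += 1
        return tuple(self._formats[fmt])

    def get_wdata(self, fmt):
        """Writable access: keep only this format, because the other
        cached representations would go stale after a modification."""
        self.get_data(fmt)
        data = self._formats[fmt]
        self._formats = {fmt: data}
        return data


frame = ToyVideoFrame("RGB", [(255, 0, 0)])
yuv = frame.get_data("YUV")      # converted once
yuv_again = frame.get_data("YUV")  # served from the cache
```

In this sketch a second read in the same format costs nothing, which is the benefit of keeping several formats alive side by side.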

Apart from conversion, IVideoFrame provides methods for creating new frames and for locking and unlocking a frame (for the internal use of OpenVIP). A locked frame cannot be obtained using the GetWData call; instead, you have to make a copy of the frame using the GetWCopy method.
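The interplay between locking and writable access amounts to a copy-on-write rule, sketched below. The class, the exception and the helper writable are hypothetical stand-ins, and the exact signatures and return values of the real GetWData and GetWCopy calls may differ; see the API reference for those.

```python
class FrameLockedError(RuntimeError):
    """Raised by the toy frame when write access is refused."""


class ToyFrame:
    """Hypothetical frame with a lock flag guarding write access."""

    def __init__(self, pixels):
        self._pixels = list(pixels)
        self._locked = False

    def lock(self):
        self._locked = True

    def unlock(self):
        self._locked = False

    def get_data(self):
        # Read-only view: a tuple cannot be modified by the caller.
        return tuple(self._pixels)

    def get_wdata(self):
        if self._locked:
            raise FrameLockedError("frame is locked; use get_wcopy()")
        return self._pixels

    def get_wcopy(self):
        # The copy is a new, unlocked frame with its own pixel storage.
        return ToyFrame(self._pixels)


def writable(frame):
    """Return a frame that may be modified: the frame itself when it is
    unlocked, otherwise a private copy."""
    try:
        frame.get_wdata()
        return frame
    except FrameLockedError:
        return frame.get_wcopy()


shared = ToyFrame([1, 2, 3])
shared.lock()
mine = writable(shared)
mine.get_wdata()[0] = 99   # does not touch the locked original
```

A filter written this way modifies frames in place when it may, and pays for a copy only when the frame is locked.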

The IAudioBuffer interface encapsulates the data and methods which are necessary to store and retrieve blocks of audio samples. These samples are always represented by 16-bit signed integer values using the machine-specific endianness (although some input modules read 8-bit samples, they convert them to the 16-bit format). It is possible to store more than one audio channel in a single audio buffer; the samples for the individual channels are then interleaved.
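Interleaving means that the buffer stores one sample per channel for the first instant, then one per channel for the next instant, and so on. The sketch below shows this layout with Python's standard array module, whose 'h' type code holds signed short integers in native byte order (16 bits on common platforms). The function names are hypothetical, and the 8-bit widening rule assumes unsigned 8-bit input as used in WAV files; OpenVIP input modules may convert differently.

```python
from array import array


def interleave(channels):
    """Merge equally long per-channel sample lists into one buffer."""
    length = len(channels[0])
    if any(len(ch) != length for ch in channels):
        raise ValueError("all channels must have the same length")
    buf = array("h")  # signed short, native byte order
    for samples_at_instant in zip(*channels):
        buf.extend(samples_at_instant)
    return buf


def deinterleave(buf, channel_count):
    """Split an interleaved buffer back into one array per channel."""
    return [buf[i::channel_count] for i in range(channel_count)]


def widen_unsigned_8bit(sample):
    """Map an unsigned 8-bit sample (0..255) to the signed 16-bit range."""
    return (sample - 128) << 8


left = [1, 2, 3]
right = [-1, -2, -3]
stereo = interleave([left, right])   # order: L0 R0 L1 R1 L2 R2
```

With this layout the samples of channel c in an n-channel buffer sit at indices c, c + n, c + 2n, and so on.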

The only class which implements the IAudioBuffer interface is called openvip.AudioBuffer. It provides methods similar to those of IVideoFrame: creating empty audio buffers, accessing them in read-only or read-write mode, and locking the buffer.

Data packets carry data that are neither video frames nor audio samples. For example, an input module that reads a subtitle file can make the subtitles available to other modules by putting them into data packets. The graphical frontend to OpenVIP uses data packets to visualize audio tracks: the visualization module reads audio samples and returns numerical values in the form of data packets.

So that data packets of a certain type can be recognized, each packet contains a type identifier (a string). Apart from this identifier, a data packet can carry an arbitrary number of items. The items are accessed by number and can contain arbitrary data.
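The structure just described, a string type identifier plus numbered items, can be modelled in a few lines. The class below is a hypothetical stand-in for illustration only; it does not reproduce the method names of openvip.DataPacket, and the "subtitle" and "audio-peaks" identifiers are made up.

```python
from dataclasses import dataclass, field
from typing import Any, List


@dataclass
class ToyDataPacket:
    """Hypothetical packet: a type identifier and a list of items."""
    type_id: str
    items: List[Any] = field(default_factory=list)

    def add_item(self, value):
        """Append an item and return the number it can be accessed by."""
        self.items.append(value)
        return len(self.items) - 1

    def get_item(self, number):
        return self.items[number]

    def set_item(self, number, value):
        self.items[number] = value


def packets_of_type(packets, type_id):
    """Pick out the packets a module is interested in."""
    return [p for p in packets if p.type_id == type_id]


subtitle = ToyDataPacket("subtitle")
text_no = subtitle.add_item("Hello, world")
peaks = ToyDataPacket("audio-peaks", [0.25, 0.8])
selected = packets_of_type([subtitle, peaks], "subtitle")
```

A consumer filters on the type identifier first and only then interprets the items, since their meaning depends on the packet type.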

The methods of the IDataPacket interface are implemented in the openvip.DataPacket class. They include basic operations such as creating new packets, accessing individual items and modifying their content.

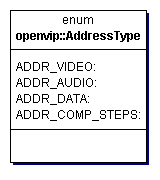

It is often necessary to identify the data a module is interested in. For example, the scheduler needs to tell a module which piece of data it should process at the moment. To fulfill this request, the module may in turn ask the core for another piece of data, and so on. Each data type has its own addressing scheme:

A video address (ADDR_VIDEO address type) is simply a number identifying a particular video frame.

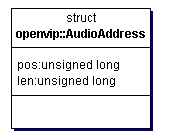

A block of audio (ADDR_AUDIO address type) is determined by an AudioAddress structure, which describes the start of the audio block (in samples from the beginning) and its length (also in samples).

The ADDR_DATA address type corresponds to a group of data packets of a given type. It is represented by a DataAddress structure, which contains the type identifier and the time interval specification (its beginning and length in seconds).

Finally, there are so-called computation step addresses (type ADDR_COMP_STEP), which hold a single number identifying the computation step. The concept of computation steps will be explained in More on modules.
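The four addressing schemes can be summarized as small record types. The field names below are assumptions derived from the descriptions above, not the actual members of the OpenVIP structures, and the helper audio_block_for_frame is a hypothetical illustration of how a video address might be mapped to the matching audio address for a given frame rate and sample rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoAddress:       # ADDR_VIDEO: a single frame number
    frame: int


@dataclass(frozen=True)
class AudioAddress:       # ADDR_AUDIO: a block of samples
    start: int            # first sample, counted from the beginning
    length: int           # number of samples in the block


@dataclass(frozen=True)
class DataAddress:        # ADDR_DATA: packets of one type in an interval
    type_id: str
    start: float          # beginning of the interval, in seconds
    length: float         # length of the interval, in seconds


@dataclass(frozen=True)
class CompStepAddress:    # ADDR_COMP_STEP: a single step number
    step: int


def audio_block_for_frame(video, fps, sample_rate):
    """Audio block covering the display time of one video frame.

    Integer arithmetic keeps consecutive blocks adjacent even when
    sample_rate is not a multiple of fps.
    """
    start = video.frame * sample_rate // fps
    end = (video.frame + 1) * sample_rate // fps
    return AudioAddress(start=start, length=end - start)


block = audio_block_for_frame(VideoAddress(25), fps=25, sample_rate=48000)
```

Frame 25 at 25 frames per second begins exactly one second into the stream, so at 48000 samples per second the block starts at sample 48000 and spans 1920 samples.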